As aspiring data analysts and data scientists, we read on almost every other web page that probability is the bedrock of data science. Yet we often fail to understand why we should study probability and how it is relevant. In this article and the ones that follow, I have tried to put together the probability concepts essential for data science applications.

Why Probability

The aim of probability is to quantify the uncertainty of events. It is used to make decisions such as how reliable a certain piece of information is, or what the chance is of a specific situation happening. It also underlies methods that organize data into meaningful clusters.

Probability

Before we dive deep into probability concepts, let us first understand what probability is. Probability is the likelihood that something will happen. It can also be described as the long-run relative frequency with which an event occurs when a large number of independent trials are conducted. Probability does not say when a certain event will occur; it says how often it will happen.

Closely associated with probability is the idea of how likely events are. As the cliché goes, if a coin is fair, either side is equally likely. Or, if a slot machine in a casino has been tampered with, winning is not as likely as losing.

Another factor related to probability is the independence of events. The occurrence of an event may or may not depend on a previous event. If it does, the probability changes, and conditional probability comes into the picture: it quantifies the probability of an event given that something else has happened. An example is the probability that a patient is suffering from kidney failure given that the patient has a severe stomach ache. There is an interesting theorem based on conditional probability, called Bayes' Theorem. Its goal is to relate P(A|B) to P(B|A). And if a set of events partitions the sample space, the idea extends to the Law of Total Probability, which gives the probability of an outcome as the sum of its conditional probabilities given each event in the partition, each weighted by the probability of that event.
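To make the kidney failure example concrete, here is a minimal sketch of Bayes' Theorem and the Law of Total Probability in Python. All three input probabilities are hypothetical numbers chosen only for illustration (they are not medical data), and the variable names are my own.

```python
from fractions import Fraction

# Hypothetical inputs, for illustration only.
p_kidney = Fraction(1, 100)             # P(A): patient has kidney failure
p_ache_given_kidney = Fraction(8, 10)   # P(B|A): stomach ache if kidney failure
p_ache_given_healthy = Fraction(1, 10)  # P(B|not A): stomach ache otherwise

# Law of Total Probability over the partition {A, not A}:
# P(B) = P(B|A) P(A) + P(B|not A) P(not A)
p_ache = (p_ache_given_kidney * p_kidney
          + p_ache_given_healthy * (1 - p_kidney))

# Bayes' Theorem: P(A|B) = P(B|A) P(A) / P(B)
p_kidney_given_ache = p_ache_given_kidney * p_kidney / p_ache

print("P(ache) =", p_ache)
print("P(kidney failure | ache) =", p_kidney_given_ache)
```

With these assumed inputs, the stomach ache raises the probability of kidney failure from 1/100 to 8/107, which is still small because the condition is rare to begin with.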

The Laws

The Law of Averages is the popular belief that everything averages out over time, so that a specific event will eventually occur if it hasn’t happened yet. It is just a belief, not a mathematical law.

Then there is the Law of Large Numbers, which states that the average of the results obtained from a large number of trials will be close to the expected value, and tends to get closer as more trials are performed.

To simplify this further, let’s say Cafe Zenia launched a new Frappuccino. For any customer there are only two outcomes: either the drink is bought or it is not. Having two outcomes does not make them equally likely, so the purchase probability need not be 50%. Every purchase is independent of the others, and we can keep track over many hours, days or months to see how many Frappuccinos are sold. Let’s say that from observation we noticed that out of every 1000 customers, 48 bought the new Frappuccino. This means the long-run probability of a customer buying the new Frappuccino is 0.048.

Now, on a certain day, the owner of Cafe Zenia notices that none of the first half of the day's customers has bought the Frappuccino, and he believes that the remaining customers are now more likely to purchase it because, as per the law of averages, the event is due to happen. However, an event is never due to happen. As per the law of large numbers, it is only after a large number of trials (customers) that the fraction of purchases approaches the observed probability of 0.048.

Thus the law of large numbers states that in a series of random events repeated over and over, the average of the results will converge to its expected value. The expected value is nothing but the probability-weighted sum of all the possible outcomes. In the example above, counting a purchase as 1 and no purchase as 0, the expected value is 0.048, or 4.8%.
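The convergence described above can be seen in a small simulation. This is a minimal sketch that assumes each customer buys independently with probability 0.048; the function name, the fixed seed and the number of customers are my own choices for illustration.

```python
import random

def running_purchase_rate(p, n_customers, seed=0):
    """Simulate independent customers and return the fraction of
    buyers observed after each customer."""
    rng = random.Random(seed)  # fixed seed only to make the run repeatable
    purchases = 0
    rates = []
    for i in range(1, n_customers + 1):
        if rng.random() < p:   # this customer buys with probability p
            purchases += 1
        rates.append(purchases / i)
    return rates

rates = running_purchase_rate(p=0.048, n_customers=200_000)

# The early rates can be far from 0.048; the later ones settle near it.
for n in (100, 1_000, 10_000, 200_000):
    print(f"after {n:>7} customers: rate = {rates[n - 1]:.4f}")
```

Note that each simulated customer is drawn without looking at earlier customers, so a run of non-buyers never makes the next purchase any more likely. The average settles only because early fluctuations are diluted by the large number of later trials.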

Random Variables

One of the essential concepts of probability theory is the random variable. A random variable is a variable that takes on different values with given probabilities. Formally, random variables are functions that map outcomes to real values. They allow us to talk about the probability of derived events and not just the basic ones. To elaborate, let’s say we roll a die. The outcome can be 1, 2, 3, 4, 5 or 6, each with probability 1/6 for a fair die. Suppose we want to capture an event defined on the outcome space {1, 2, 3, 4, 5, 6}; for this we can use a random variable. For example, to consider whether the number thrown is even or odd, we can define a random variable that takes the value 1 if the outcome is odd and 0 if the outcome is even. Such binary random variables are known as indicator variables, since they indicate whether a certain event happened or not.
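The odd/even indicator variable can be written directly as a function on the outcome space. A minimal sketch, assuming a fair die; the names `p_outcome`, `is_odd` and `dist` are illustrative.

```python
from fractions import Fraction

# Outcome space of a fair die, each outcome with probability 1/6.
outcomes = [1, 2, 3, 4, 5, 6]
p_outcome = {w: Fraction(1, 6) for w in outcomes}

def is_odd(w):
    """Indicator random variable: maps an outcome to 1 if odd, 0 if even."""
    return 1 if w % 2 == 1 else 0

# Distribution of the random variable: add up the probability of every
# outcome that maps to the same value.
dist = {}
for w, p in p_outcome.items():
    x = is_odd(w)
    dist[x] = dist.get(x, Fraction(0)) + p

print(dist)
```

The random variable itself is deterministic; the randomness comes entirely from which outcome the die produces.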

As the name suggests, a variable can take multiple values, and a random variable takes each of its values with an associated probability. The probability distribution specifies the probability that a random variable takes each particular value. Often we consider multiple random variables to define an event of interest. Distributions over more than one variable at a time are called joint distributions, as the probability is determined by all the variables considered jointly. If we sum out all the random variables from a joint distribution except one, we obtain the distribution of that single random variable. This is referred to as the marginal probability distribution. A conditional distribution, as the name suggests, specifies the distribution of a random variable when the value of another random variable is known. Random variables are said to be independent if the distribution of one doesn’t change on learning the value of the other. And random variables are conditionally independent if, once the value of some other random variable is known, they exhibit independence in their distributions.
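These definitions can be checked on a small table. The sketch below builds the joint distribution of two indicator variables on one roll of a fair die: X is 1 if the outcome is odd, and Y is 1 if the outcome is 4 or more. Y is an assumption added here purely for illustration.

```python
from collections import defaultdict
from fractions import Fraction

# Joint distribution P(X, Y) built from the six equally likely outcomes.
joint = defaultdict(Fraction)
for w in range(1, 7):
    x = 1 if w % 2 == 1 else 0   # X: outcome is odd
    y = 1 if w >= 4 else 0       # Y: outcome is 4, 5 or 6
    joint[(x, y)] += Fraction(1, 6)

# Marginals: sum the joint distribution over the other variable.
marginal_x = defaultdict(Fraction)
marginal_y = defaultdict(Fraction)
for (x, y), p in joint.items():
    marginal_x[x] += p
    marginal_y[y] += p

def conditional_x_given_y(y):
    """P(X | Y = y) = P(X, Y = y) / P(Y = y)."""
    return {x: joint[(x, y)] / marginal_y[y] for x in (0, 1)}

# Independence holds only if the joint factorises into the marginals.
independent = all(
    joint[(x, y)] == marginal_x[x] * marginal_y[y]
    for x in (0, 1) for y in (0, 1)
)

print("P(X=1) =", marginal_x[1])
print("P(X=1 | Y=1) =", conditional_x_given_y(1)[1])
print("independent:", independent)
```

Here learning that Y = 1 changes the distribution of X (only one of 4, 5, 6 is odd), so X and Y are not independent.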

Chain Rule and Bayes Rule

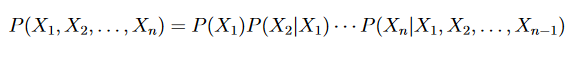

These rules relate joint distributions to conditional distributions across variables. Using the chain rule, we can obtain the joint distribution of multiple variables as a product of conditional distributions:

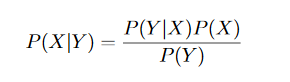

Bayes' Rule computes the conditional probability P(X|Y) from P(Y|X).
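For reference, both rules can be written out in their standard textbook form. The symbols X_1, ..., X_n for generic random variables and the sum over values x of X are notation assumed here.

```latex
% Chain rule: a joint distribution as a product of conditionals
P(X_1, X_2, \dots, X_n)
  = P(X_1)\, P(X_2 \mid X_1) \cdots P(X_n \mid X_1, \dots, X_{n-1})

% Bayes' rule, with the denominator expanded by the law of total probability
P(X \mid Y) = \frac{P(Y \mid X)\, P(X)}{P(Y)},
\qquad
P(Y) = \sum_{x} P(Y \mid X = x)\, P(X = x)
```

For two variables the chain rule reduces to P(X, Y) = P(X) P(Y|X) = P(Y) P(X|Y), and dividing the two right-hand sides by P(Y) gives Bayes' rule.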

Distribution Types

Probability distributions are divided into two classes: discrete and continuous. A discrete distribution is described by a probability mass function, so called because it divides a unit mass (the total probability of 1) and places it on the different values taken by the random variable. A continuous random variable can take infinitely many different values, and hence the probability at any specific point is 0. In that case we define a probability density function, and a probability is calculated by integrating the density over a range rather than evaluating it at a single point.

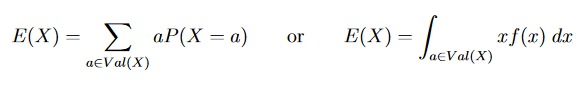
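The difference between a point and a range for a continuous variable can be sketched numerically. The example below assumes an exponential density with rate 1, which is my choice for illustration and not a distribution the article discusses, and integrates it with a simple midpoint rule.

```python
import math

def exp_pdf(x, rate=1.0):
    """Density of an exponential distribution (assumed example)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

def integrate(f, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width

# Probability of a range: integrate the density over [1, 2].
p_interval = integrate(exp_pdf, 1.0, 2.0)

# Probability of a single point: an interval of zero width.
p_point = integrate(exp_pdf, 1.5, 1.5)

# Closed form for comparison: exp(-1) - exp(-2).
exact = math.exp(-1) - math.exp(-2)

print(p_interval, exact, p_point)
```

The density at 1.5 is not zero, but the probability of landing exactly on 1.5 is, because the interval being integrated has no width.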

Expectation is the mean value of a random variable, with each value weighted by its probability.

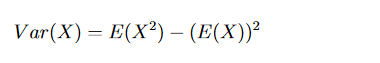

Variance is a measure of the spread of a distribution around its mean.

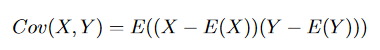

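Covariance is a measure of how two random variables vary together: it is positive when they tend to move in the same direction and negative when they tend to move in opposite directions.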
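As a worked example of all three quantities, the sketch below computes the expectation and variance of a fair die roll, and its covariance with the odd/even indicator variable. It uses the standard definitions E[X], E[(X - E[X])^2] and E[(X - E[X])(Y - E[Y])]; the helper names are illustrative.

```python
from fractions import Fraction

outcomes = range(1, 7)   # faces of a fair die
p = Fraction(1, 6)       # probability of each face

def expectation(f):
    """E[f] = sum over outcomes of f(outcome) * P(outcome)."""
    return sum(f(w) * p for w in outcomes)

def value(w):
    return w             # X: the number rolled

def is_odd(w):
    return w % 2         # I: indicator that the number is odd

mean_x = expectation(value)
var_x = expectation(lambda w: (value(w) - mean_x) ** 2)

mean_i = expectation(is_odd)
cov_xi = expectation(lambda w: (value(w) - mean_x) * (is_odd(w) - mean_i))

print("E[X] =", mean_x)
print("Var(X) =", var_x)
print("Cov(X, I) =", cov_xi)
```

The covariance comes out slightly negative because the odd faces (1, 3, 5) are on average smaller than the even faces (2, 4, 6).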

(Reference & Source: https://see.stanford.edu/materials/aimlcs229/cs229-prob.pdf)
