With advances in fields such as natural language processing, artificial intelligence, and engineering, experts from all of these areas are now applying their techniques across almost every line of business. As a result, we can collect and store the extensive data generated by nearly every system. The open source Nutch project, started by Doug Cutting, marks the beginning of efforts to build a system that can store petabytes of data; Hadoop later grew out of it.

Although "big data" became a buzzword only recently, large-scale data collection and storage have existed for a long time. Companies like Belcan provided analytics services to the aerospace, defense, and automotive industries, tracking and working with big data. But now that businesses of every kind want to exploit the knowledge contained in their historical data, new technologies have entered the market that reduce the complexity of installing systems suited to big data.

The Hadoop ecosystem, as packaged by Cloudera, is one such system. It helps businesses move beyond spreadsheets and analyze much larger datasets, to the point of forecasting or predicting their business growth.

Jay Kreps, Principal Engineer at LinkedIn, says:

“Hadoop is a key ingredient in allowing LinkedIn to build many of our most computationally difficult features, allowing us to harness our incredible data about the professional world for our users.”

Hadoop comprises many tools that work with one another to manage big data effectively, which in turn helps industries succeed through analytics.

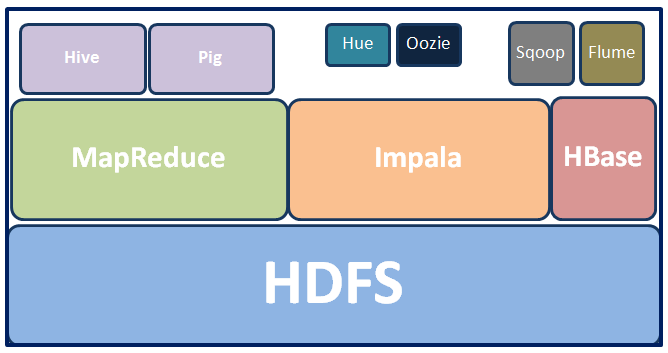

The Hadoop ecosystem has two essential components. For storing data of large volume and variety, there is the Hadoop Distributed File System (HDFS), which spreads data across a cluster of multiple nodes. On top of this storage layer sits MapReduce, which processes user-specified data from a large volume in parallel. Mappers organize the data into key-value pairs; the framework then shuffles and sorts these pairs by key, and reducers aggregate the values for each key. These two components are the building blocks of the Hadoop ecosystem.
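To make the map, shuffle/sort, and reduce flow concrete, here is a minimal single-machine sketch of a word count in plain Python. It does not use Hadoop's actual API; the function names (`map_phase`, `shuffle_and_sort`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration, and the shuffle and sort is written as its own step so that the grouping by key is easy to see.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Emit a (word, 1) key-value pair for every word in the input."""
    for line in lines:
        for word in line.lower().split():
            yield (word, 1)


def shuffle_and_sort(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all values by key and order the keys, as happens between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Aggregate the list of values for each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run the three phases in sequence on an in-memory list of lines."""
    return reduce_phase(shuffle_and_sort(map_phase(lines)))


if __name__ == "__main__":
    sample = ["big data", "Big Data analytics"]
    print(word_count(sample))
```

In a real cluster the mappers and reducers would run on different nodes against blocks stored in HDFS; this sketch only mirrors the data flow on one machine.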

On top of MapReduce, other systems such as Hive and Pig were developed as independent projects and later made open source. Hive, optimized for large batch-processing jobs, lets users run SQL queries that are executed as MapReduce jobs, which in turn read data from HDFS. Along similar lines, Pig uses simple scripts to fetch data from HDFS through MapReduce. MapReduce takes time to report back on large amounts of data, so querying HDFS directly, without going through MapReduce, became desirable. This was the basis of another independent project, Impala. Impala queries HDFS directly using SQL and is optimized for low-latency queries, so Impala queries typically run much faster than equivalent Hive queries.

Another widely used system is Sqoop. Its purpose is to take data from relational databases such as SQL Server and add it to HDFS as delimited files. Flume serves a similar purpose, adding data from external systems to HDFS.
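The idea of turning relational rows into delimited text can be illustrated with a small Python sketch. This is not Sqoop and does not touch HDFS: it assumes an in-memory SQLite database standing in for the relational source, and the `orders` table and the `export_table_as_delimited` helper are hypothetical names made up for the example.

```python
import csv
import io
import sqlite3


def export_table_as_delimited(conn: sqlite3.Connection, table: str,
                              delimiter: str = ",") -> str:
    """Read every row of `table` and return it as delimited text, one row per line."""
    # The table name is interpolated directly, so it must come from trusted code.
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="\n")
    writer.writerows(cursor)
    return buffer.getvalue()


# Hypothetical source table standing in for a relational database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "acme", 120.5), (2, "globex", 75.0)],
)

delimited = export_table_as_delimited(conn, "orders")
print(delimited)
```

A real import would write such files into HDFS in parallel across the cluster; the sketch only shows the shape of the delimited output.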

Apart from these, the Hadoop ecosystem has several more important tools. HBase is a real-time database built on top of HDFS. Hue is a graphical front end for the cluster. Oozie is a workflow manager. And Mahout is a machine learning library.

With so many effective independent projects brought together, the Hadoop ecosystem is one of the best toolsets available today for big data analytics.